This week’s exercise was about crime mapping, specifically hotspot analysis. We became familiar with techniques commonly used in crime analysis and learned to aggregate crime events to calculate crime rates. We examined spatial patterns in crime rates and how those rates relate to socio-economic characteristics. We also learned about global and local spatial clustering methods, and we compared how reliably different hotspot mapping methods predict crime.

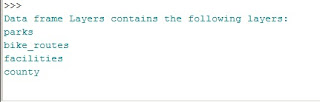

In the first part of the assignment, we were to determine whether a relationship existed between socio-economic variables and residential burglaries. From the crime dataset provided, I used a SQL query to select only residential burglaries and joined that data spatially with census data. A table join with the demographic data then allowed me to calculate the crime rate per 1,000 housing units. From here, I created a choropleth map of residential burglaries per 1,000 housing units by census tract, as well as a choropleth map of the percentage of housing units that were rented in each census tract. Finally, I created a scatterplot of the percentage of housing units rented vs. the number of burglaries per 1,000 housing units.
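
The rate calculation behind the maps, and a numeric summary of the scatterplot, can be sketched in a few lines of Python. This is a minimal illustration, assuming a tract table that already holds the burglary count from the spatial join and the housing-unit and rented-unit counts from the census join; the tract values and the helper names (`rate_per_1000`, `pearson_r`) are hypothetical. The Pearson correlation coefficient is one simple way to put a number on the scatterplot; it was not part of the original workflow.

```python
from math import sqrt

# Hypothetical tract records: (tract_id, burglary_count, housing_units, rented_units).
# These values are made up for illustration; they are not the assignment data.
tracts = [
    ("A", 12, 1500, 300),
    ("B", 30, 1200, 700),
    ("C", 8, 2000, 250),
    ("D", 25, 1000, 600),
]

def rate_per_1000(count, housing_units):
    """Burglaries per 1,000 housing units."""
    return count / housing_units * 1000

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# x-axis of the scatterplot: % of housing units rented; y-axis: burglary rate.
pct_rented = [rented / units * 100 for _, _, units, rented in tracts]
rates = [rate_per_1000(count, units) for _, count, units, _ in tracts]

for (tid, _, _, _), pct, rate in zip(tracts, pct_rented, rates):
    print(f"{tid}: {pct:.1f}% rented, {rate:.1f} burglaries per 1,000 units")

print(f"Pearson r = {pearson_r(pct_rented, rates):.2f}")
```

A coefficient near +1 would match a scatterplot in which tracts with more rentals also have higher burglary rates; it describes a linear association only and says nothing about cause.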

The second part of the assignment introduced us to kernel density hotspot mapping, a technique commonly used to show clustering of point events. Although this technique does not indicate statistical significance, it does show hotspots in a visually appealing way. One has to be careful, however, to choose a reasonable bandwidth, because the bandwidth controls the amount of smoothing. If the bandwidth is too large, there will be too much smoothing and the map will lose detail. If the bandwidth is too small, there will be too little smoothing, and the surface will break up into many small peaks around individual events. Here, we created a kernel density map of auto thefts with a bandwidth of 0.5 miles and a cell size of 100 feet (the cell size needs to be much smaller than the bandwidth). Because I wanted to classify the map relative to the average density, I excluded cells with a value of 0, calculated the mean density of the remaining cells, and classified the data in multiples of the mean (2 × mean, 3 × mean, etc.) to create the hotspot map.
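
The kernel density step and the mean-multiple classification can be sketched in plain Python. This is an illustrative sketch, not ArcMap's implementation: it assumes projected coordinates in feet, uses a quartic kernel (the kernel Esri describes for its Kernel Density tool), ignores population fields and edge effects, and uses made-up point locations. Each cell stores a density in events per square foot, and class *k* marks cells whose density is at least *k* times the mean of the non-zero cells.

```python
import math

BANDWIDTH_FT = 0.5 * 5280  # 0.5-mile search radius, in feet
CELL_FT = 100              # output cell size, in feet

def quartic_density(points, xmin, ymin, ncols, nrows, h=BANDWIDTH_FT, cell=CELL_FT):
    """Density surface (events per square foot) from a quartic kernel of radius h."""
    h2 = h * h
    grid = []
    for r in range(nrows):
        cy = ymin + (r + 0.5) * cell  # y of the cell centre
        row = []
        for c in range(ncols):
            cx = xmin + (c + 0.5) * cell  # x of the cell centre
            total = 0.0
            for px, py in points:
                d2 = (px - cx) ** 2 + (py - cy) ** 2
                if d2 < h2:  # events farther than the bandwidth contribute nothing
                    total += (3 / math.pi) * (1 - d2 / h2) ** 2
            row.append(total / h2)
        grid.append(row)
    return grid

def classify_by_mean(grid, max_class=5):
    """Class k = density of at least k times the mean of the non-zero cells (capped)."""
    nonzero = [v for row in grid for v in row if v > 0]  # exclude the "0" cells
    mean = sum(nonzero) / len(nonzero)
    classes = [[min(int(v // mean), max_class) for v in row] for row in grid]
    return classes, mean

# Hypothetical auto-theft locations, in feet.
thefts = [(3000, 3000), (3200, 3100), (3100, 2900), (5000, 5200), (6500, 1500)]
surface = quartic_density(thefts, xmin=0, ymin=0, ncols=80, nrows=80)
classes, mean = classify_by_mean(surface)
hot_cells = sum(1 for row in classes for k in row if k >= 3)
print(f"mean non-zero density: {mean:.3e} per sq ft; cells >= 3 x mean: {hot_cells}")
```

Because each quartic kernel integrates to 1, summing the surface and multiplying by the cell area should return roughly the number of events whose kernels fall inside the grid, which is a quick sanity check on the bandwidth and cell-size units.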

The third part of the assignment was to create three hotspot maps using different techniques and to compare them. The first method was grid-based hotspot mapping. I performed a spatial join between the burglary and grid layers, which created a count field giving the number of burglaries in each grid cell. I then manually selected the cells in the top 20% of counts (the top quintile) and dissolved them into a single polygon, which became my hotspot map. The second method was a kernel density hotspot map similar to the one in the second part of the assignment, and I used the same bandwidth (0.5 miles). To classify the data, I excluded any areas with a value of 0 and used the Reclassify tool so that only areas with more than 3 times the mean density would be displayed as part of the hotspot map. The third hotspot map was created using the Local Moran’s I technique, which works on crime counts or rates aggregated to meaningful boundaries. I spatially joined the 2007 burglaries to block groups and calculated the crime rate (number of burglaries per 1,000 housing units). I then ran the Local Moran’s I tool in ArcMap, which classified the block groups into different types of spatial clusters and outliers. Since this is a hotspot map, I selected only the “high-high” clusters, meaning block groups with high burglary rates next to other block groups with high burglary rates. To compare these three types of hotspot maps, I created a map, shown below, that displays all three on the same data frame (I used various levels of transparency so that all are visible).
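
The grid-based top-quintile selection and the Local Moran’s I “high-high” classification can both be sketched on a toy lattice. This sketch assumes regular square areas with rook (shared-edge) contiguity standing in for grid cells and block groups, row-standardised weights, and hypothetical burglary values; the function names are illustrative. It computes a common z-score form of Local Moran’s I and labels a unit high-high when both its value and its neighbours’ average are above the mean. It omits the significance test, whereas ArcMap only labels a feature HH when the result is statistically significant, so a real run would typically return fewer units.

```python
import math

def top_quintile(counts):
    """Indices of the cells in the top 20% by count (at least one cell)."""
    k = max(1, math.ceil(0.2 * len(counts)))
    order = sorted(range(len(counts)), key=lambda i: counts[i], reverse=True)
    return set(order[:k])

def rook_neighbors(nrows, ncols):
    """Neighbour lists for a regular lattice; units are neighbours if they share an edge."""
    nbrs = {}
    for r in range(nrows):
        for c in range(ncols):
            candidates = ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            nbrs[r * ncols + c] = [rr * ncols + cc for rr, cc in candidates
                                   if 0 <= rr < nrows and 0 <= cc < ncols]
    return nbrs

def local_morans_i(values, nbrs):
    """Return (I_i, z_i, lag_i) for each unit, using row-standardised weights."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    z = [(v - mean) / sd for v in values]
    results = []
    for i in range(n):
        lag = sum(z[j] for j in nbrs[i]) / len(nbrs[i])  # average z of the neighbours
        results.append((z[i] * lag, z[i], lag))
    return results

def high_high(values, nbrs):
    """Units above the mean whose neighbours are, on average, also above the mean.
    No significance test is applied (unlike ArcMap's HH label)."""
    return {i for i, (_, zi, lag) in enumerate(local_morans_i(values, nbrs))
            if zi > 0 and lag > 0}

# Hypothetical 4 x 4 lattice (row-major order), high in the top-left corner.
# For brevity the same numbers serve as grid counts and as block-group rates.
values = [40, 35, 10, 5,
          38, 30,  8, 6,
           9,  7,  5, 4,
           6,  5,  3, 2]
nbrs = rook_neighbors(4, 4)

print("Top-quintile grid cells:", sorted(top_quintile(values)))
print("High-high units:", sorted(high_high(values, nbrs)))
```

The grid method only ranks counts, so an isolated high cell can still make the top quintile; Local Moran’s I additionally requires the neighbours to be high, which is what makes it a clustering method rather than a ranking.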

Next, we were to determine how reliably the hotspot maps predict crime. To do this, I used burglary data from 2008 (the hotspot maps used 2007 data). I determined the total area of each of the three hotspot maps and the number of 2008 burglaries within each hotspot area. From these, I calculated the percentage of 2008 burglaries that fell within the 2007 hotspots and the crime density (2008 burglaries per unit of hotspot area). The grid-based overlay method captured the highest percentage of 2008 burglaries in its 2007 hotspot area, but its hotspots were also spread over a larger area. The kernel density method captured a smaller percentage of 2008 burglaries, but its total hotspot area was much smaller and its crime density was higher. In my opinion, this makes it the better method for predicting future crime, at least with regard to deploying more officers to the area: officers would be concentrated in a smaller area (compared with the other hotspot maps), so response times to calls would be shorter and backup could reach the scene more quickly, if necessary. The Local Moran’s I method showed a lower percentage and a lower crime density.
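
The two reliability measures reduce to simple ratios: the percentage of 2008 burglaries that fall inside a 2007 hotspot, and 2008 burglaries per unit of hotspot area. The sketch below assumes areas in square miles; the map labels, counts, and areas are invented to show the arithmetic and are not the assignment's results.

```python
from dataclasses import dataclass

@dataclass
class HotspotResult:
    method: str
    area_sq_mi: float  # total area of the 2007 hotspot polygons (square miles assumed)
    hits_2008: int     # 2008 burglaries that fall inside those polygons

def evaluate(results, total_2008):
    """Return (method, % of 2008 burglaries captured, burglaries per sq mi) for each map."""
    rows = []
    for r in results:
        pct_captured = r.hits_2008 / total_2008 * 100
        density = r.hits_2008 / r.area_sq_mi
        rows.append((r.method, pct_captured, density))
    return rows

# Invented inputs that only illustrate the calculation.
results = [
    HotspotResult("map A (larger)", area_sq_mi=10.0, hits_2008=400),
    HotspotResult("map B (smaller)", area_sq_mi=4.0, hits_2008=300),
]
rows = evaluate(results, total_2008=1000)
for method, pct, density in rows:
    print(f"{method:16s} {pct:5.1f}% captured  {density:6.1f} burglaries per sq mi")
```

A map can score well on the first measure simply by drawing larger hotspots; dividing by area, as the density measure does, is what supports the officer-deployment argument above.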

This was a really interesting assignment. I have taken some criminal justice courses but had never worked with crime mapping or hotspot analysis. I found it interesting to learn about the various mapping methods and the pros and cons of each. I also found it helpful to think about which analysis works best in terms of where officers are needed and how quickly they could reach an incident.