In this lab, we carried out a building damage assessment based on aerial imagery taken before and after Superstorm Sandy struck the northeastern United States. Although Sandy was not especially powerful compared with some hurricanes, its extreme size, its collision with a powerful weather system moving into the Northeast, and its impact on several of the largest U.S. cities (including a historic storm surge) combined to make it, at the time, the second costliest cyclone to hit the United States since 1900.

First, I created a base map using the World Countries, US boundary, and FEMA States shapefiles. The FEMA shapefile was created from a selection of impacted states in the US boundary shapefile. After importing the Sandy storm track table, I created a polyline showing the storm’s path from the original hurricane track points. I learned to create marker symbols and symbolized the track points with a hurricane symbol. I used a green-to-red color scheme, with green showing where Sandy was weakest and red where it was strongest, in this case a Category 2 hurricane. I labeled the hurricane track points and added graticules and the essential map elements. Below is my final map.
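
The track-building and symbology steps above can be sketched outside the GIS. This is a minimal illustration rather than the workflow the software runs: the `TrackPoint` fields and the sample values are hypothetical placeholders, not real Sandy track data, and the linear color ramp is an assumption about how a green-to-red scheme might be computed.

```python
from dataclasses import dataclass


@dataclass
class TrackPoint:
    # Hypothetical field names; a real storm track table may use different ones.
    lat: float
    lon: float
    wind_kt: float


def build_track(points):
    """Pair consecutive points into line segments, preserving observation order."""
    return list(zip(points, points[1:]))


def ramp_color(value, vmin, vmax):
    """Map a value onto a linear green-to-red ramp, returned as (R, G, B)."""
    if vmax == vmin:
        return (0, 255, 0)
    t = (value - vmin) / (vmax - vmin)
    t = max(0.0, min(1.0, t))  # clamp values outside the range
    return (round(255 * t), round(255 * (1 - t)), 0)


# Made-up sample points, for illustration only.
sample = [
    TrackPoint(14.0, -77.0, 35.0),
    TrackPoint(20.0, -76.0, 90.0),
    TrackPoint(30.0, -75.0, 65.0),
]
segments = build_track(sample)
winds = [p.wind_kt for p in sample]
colors = [ramp_color(p.wind_kt, min(winds), max(winds)) for p in sample]
```

The weakest point in the sample gets pure green and the strongest gets pure red, which mirrors the symbology described above.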

Next, I prepared the data for the damage assessment by creating a new file geodatabase and two raster mosaics, one for the pre-storm imagery and one for the post-storm imagery. I also learned to compare the before and after imagery using the Flicker and Swipe tools in the Effects toolbar. One of the most important parts of the assignment was creating attribute domains, which constrain the values allowed in a field of an attribute table or feature class. Shown below is a screenshot of the domains I created for the damage assessment. Damage ranges from 0 (no damage) to 4 (total destruction).
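
To show what a coded-value domain enforces, here is a small sketch in plain Python. Only the endpoints (0 for no damage, 4 for total destruction) come from the lab; the labels for codes 1 through 3 are assumed from the order of the categories in the damage table, and the names `DAMAGE_DOMAIN` and `validate_damage` are hypothetical.

```python
# Coded-value domain for structural damage.
# Codes 0 and 4 are stated in the lab; the labels for 1-3 are an assumption.
DAMAGE_DOMAIN = {
    0: "No Damage",
    1: "Affected",
    2: "Minor Damage",
    3: "Major Damage",
    4: "Destroyed",
}


def validate_damage(code):
    """Return the label for a valid code, or raise ValueError otherwise."""
    is_plain_int = isinstance(code, int) and not isinstance(code, bool)
    if not is_plain_int or code not in DAMAGE_DOMAIN:
        raise ValueError(f"{code!r} is not a valid damage code (expected 0-4)")
    return DAMAGE_DOMAIN[code]
```

A domain in the geodatabase plays the same role: an editor can only pick one of the listed codes, so values such as 7 or "bad" never reach the attribute table.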

I added the county parcels and the study area layers to my map. This part was quite time-consuming, as I had to digitize each structure within the study area from the pre-storm image and then compare the pre-storm and post-storm imagery to determine the level of damage each structure received. The damage level was often hard to judge from aerial imagery unless the damage was very severe. It was also often difficult to determine the building type (residential, commercial, or industrial).

Next, I wanted to examine the relationship between the locations of the damaged structures and their proximity to the coastline. I digitized the coastline near the study area and used Select by Location to count the structures in each damage category within 100 m, 100-200 m, and 200-300 m of the coastline. As one would imagine, structures closer to the coastline were more likely to be destroyed or suffer catastrophic damage, and those farther from the coastline were more likely to suffer little to no damage: 10 of the 12 structures within 100 m were destroyed, compared with 4 of the 42 structures in the 200-300 m band, and every undamaged structure was more than 200 m from the coastline. Structures shielded from the wind and/or water had a better chance of surviving intact as well. Below is a table of the structural damage.

Count of structures within distance categories from the coastline

| Structural Damage Category | 0-100 m | 100-200 m | 200-300 m |
|---|---|---|---|
| No Damage | 0 | 0 | 7 |
| Affected | 0 | 6 | 7 |
| Minor Damage | 0 | 26 | 22 |
| Major Damage | 2 | 6 | 2 |
| Destroyed | 10 | 10 | 4 |
| Total | 12 | 48 | 42 |

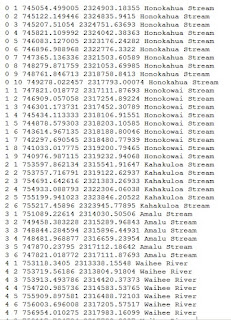
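
The distance banding behind the table can be sketched as follows. This is a rough stand-in for Select by Location, not what the tool does internally: it assumes planar coordinates in meters (a projected coordinate system), treats each band as half-open (a structure exactly 100 m away falls in the 100-200 m band), and uses made-up coordinates. All function names are hypothetical.

```python
import math
from collections import Counter


def point_segment_distance(p, a, b):
    """Shortest planar distance from point p to the segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:  # degenerate segment: a and b coincide
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_line(p, line):
    """Shortest distance from p to a polyline given as a list of vertices."""
    return min(point_segment_distance(p, a, b) for a, b in zip(line, line[1:]))


# Half-open bands [low, high) in meters; the boundary handling is an assumption.
BANDS = [(0, 100, "0-100 m"), (100, 200, "100-200 m"), (200, 300, "200-300 m")]


def band_for(distance_m):
    """Return the band label for a distance, or None beyond 300 m."""
    for low, high, label in BANDS:
        if low <= distance_m < high:
            return label
    return None


# Made-up coastline and structure locations, for illustration only.
coastline = [(0.0, 0.0), (1000.0, 0.0)]
structures = [(100.0, 50.0), (500.0, 150.0), (900.0, 250.0), (500.0, 400.0)]
counts = Counter(band_for(distance_to_line(s, coastline)) for s in structures)
```

Grouping the counts further by damage code would reproduce the layout of the table above.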

I used the Attribute Transfer tool to copy the attributes from the county parcels layer to the structure damage layer. To populate the new fields, I manually matched each parcel to its damaged structure point. Once I had done this for all the damage points, I exported the data to an Excel spreadsheet and labeled the points. The purpose of this step was to help determine who owns the damaged structures for emergency management and insurance purposes.
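
For comparison, the parcel-to-structure matching could in principle be automated with a point-in-polygon test. The sketch below is a different technique from the manual Attribute Transfer workflow used in the lab: it uses ray casting on simple polygon rings, and the record layout (`xy`, `ring`, `owner`) and sample values are hypothetical.

```python
def point_in_polygon(pt, ring):
    """Ray-casting test: True if pt lies inside the polygon ring (list of vertices)."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def transfer_attributes(points, parcels, fields):
    """Copy the listed fields from the first containing parcel onto each point."""
    result = []
    for point in points:
        record = dict(point)
        for parcel in parcels:
            if point_in_polygon(point["xy"], parcel["ring"]):
                for field in fields:
                    record[field] = parcel.get(field)
                break
        result.append(record)
    return result


# Hypothetical parcel and damage points, for illustration only.
parcels = [{"ring": [(0, 0), (10, 0), (10, 10), (0, 10)], "owner": "Parcel A owner"}]
damage_points = [{"xy": (5, 5), "damage": 4}, {"xy": (15, 5), "damage": 2}]
joined = transfer_attributes(damage_points, parcels, ["owner"])
```

Points that fall outside every parcel keep their original attributes, so they are easy to flag for the kind of manual matching described above.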

This lab seems to cover most of what damage assessment is used for. Although manual digitizing is rather tedious, it helps determine the extent of the damage. In a real situation, however, I would be comfortable creating only the initial assessment from aerial imagery; going through the area in person to confirm the assessment values would be important as well.